A Year of bitPaintr

I can start by saying that I did not imagine the bitPaintr project doing as well as it did. And I have no problem thanking all the original backers once again, even though you are all probably tired of hearing it. As a direct result of your support, so many good things happened for me over the past year. I could tell you about all of them, but that would make this post too long and too boring, so I will just concentrate on the two most significant things that resulted from this campaign.

The first is that I finally found my audience. Slowly at first, then more rapidly once the NPR piece aired, people started hearing about and reacting to my art. The more people heard about it, the more the media covered it, and then even more people heard about it. While it did not go completely viral, it did snowball, and I found myself in dozens of news articles, features, and video pieces. Here is a list of some of my favorites. This time last year I was struggling to find an audience and would have settled for any venue to showcase my art. Today, I am able to pick and choose from multiple opportunities.

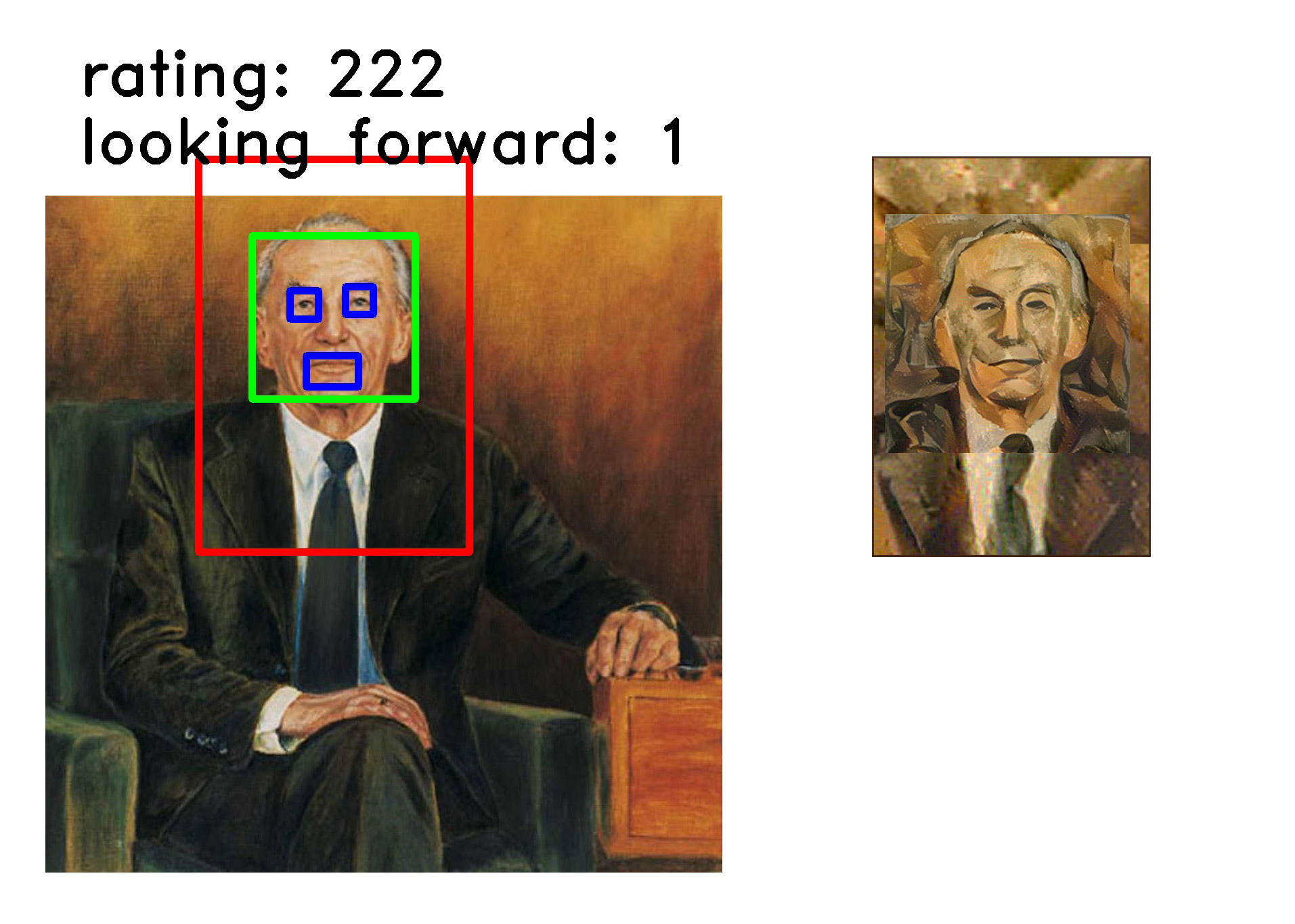

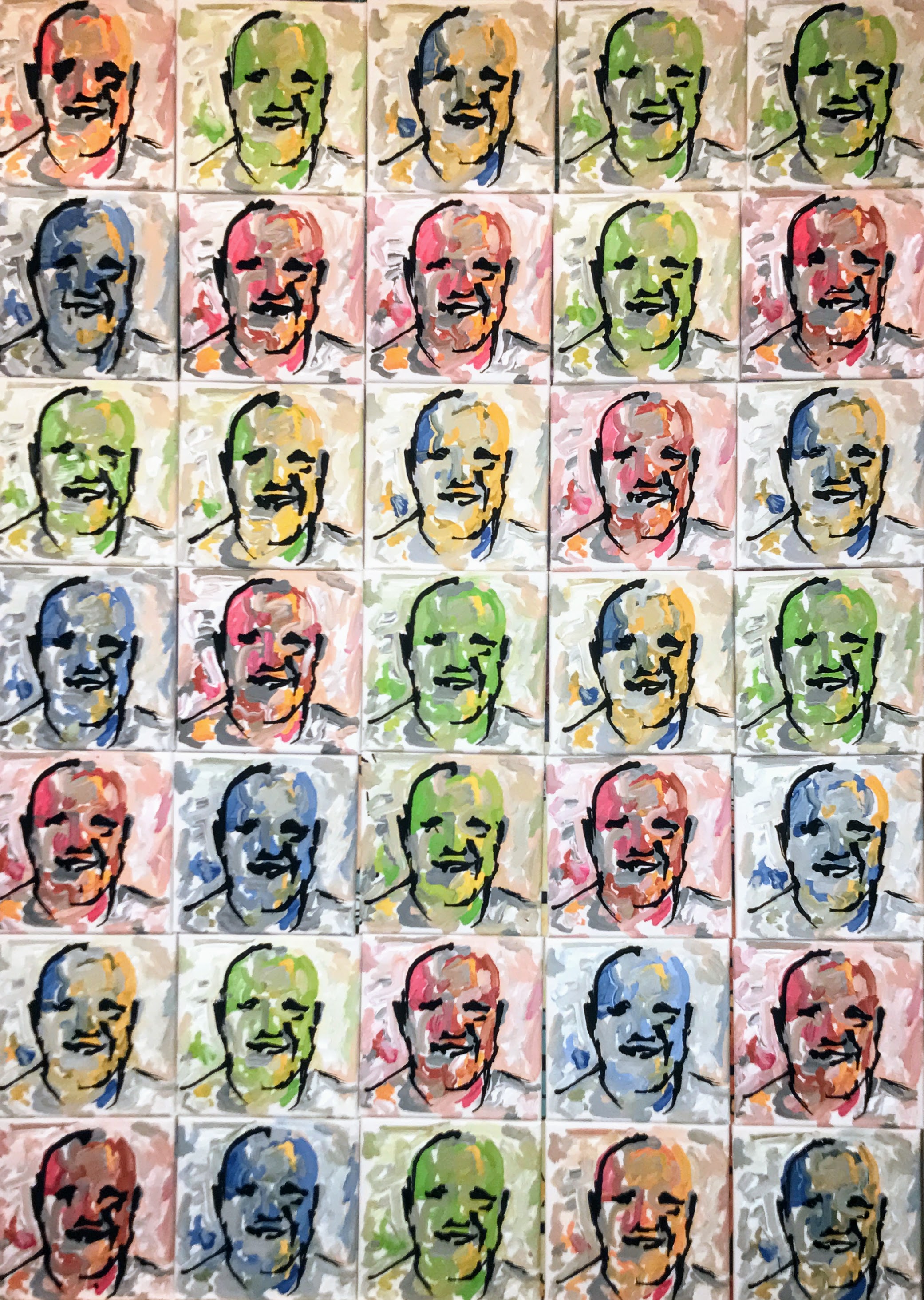

The second most significant part of all this is that I found my voice. I am not sure I fully understood my own art before, or at least not as well as I do now. I had the opportunity to speak to, hang out with, and get feedback from you all, other artists, critics, and various members of the artificial intelligence community. All this interaction has led me to realize that the paintings my robots produce are just artifacts of my artistic process. I once focused on making these artifacts as beautiful as possible, and while that is still important to me, I have come to realize that the paintings are the most boring part of this whole thing.

The interaction, artificial creativity, processes, and time-lapse videos are where all the action is. In the past year I have learned that my art is an interactive performance piece that explores creativity and asks the sometimes trite questions of "What is Art?" and "What makes me, Pindar, an Artist?", or what makes anyone an artist. This is usually a cliche theme, and as such it is a difficult topic to address without coming off as pretentious. But I think the way my robots address it is novel and interesting. Well, at least I hope so.

Next Steps

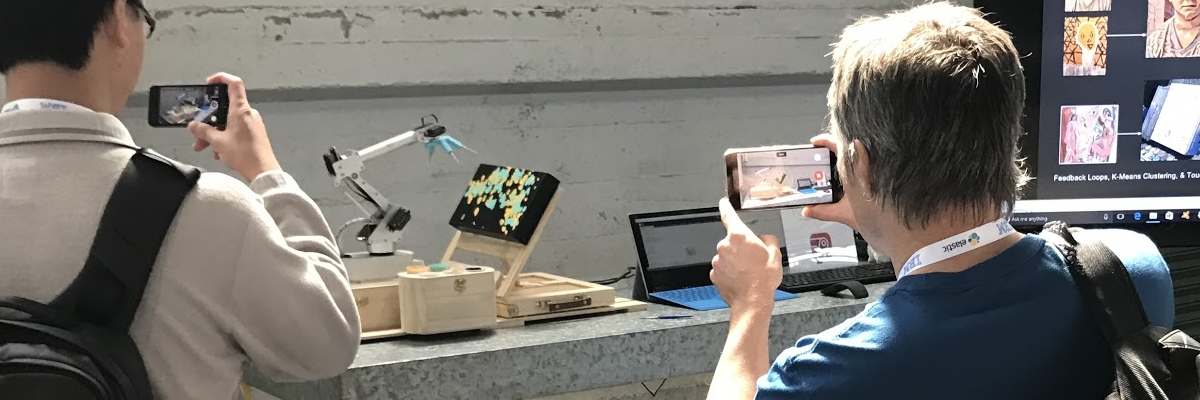

As I close up bitPaintr, I am looking forward to the next robot project, called cloudPainter. I will begin by telling you the coolest part of the project, which is that I have a new partner, my son Hunter. He is helping me focus on new angles that I had not considered before. Furthermore, our weekend forays into machine learning, 3D printing, and experimental AI concepts have really rejuvenated my energy. Already his enthusiasm, input, and assistance have resulted in multiple hardware upgrades. While the machine in the following photo may look like your average run-of-the-mill painting robot, it has two major hardware upgrades that we have been working on.