In art school they bang in the idea of Texture Texture Texture, which is of course hard to achieve with digital works. Layers and layers of overlays are a technique that I have found to achieve it.

SVG Backgrounds

One of the limitations of on-chain art is file size. Everything looks pixelated. It's an aesthetic, but also a challenge. Just made a bunch of files that will bring high resolution graphics to on-chain art. Will show more samples in the coming days.

It's a Spectrum

One of the things I like to explore is Free Will, and just how much of it we have. I have heard and read convincing arguments that we have none. That everything we do is a deterministic biochemical reaction. And the reflection that my robots do when creating this is similar. Many of the algorithms are deterministic, but some are not, and that is where things are interesting. The following are three images where I am trying to explore the concept visually from the first which is mostly in the realm of our souls, to the third, which attempts to have a purely digital look. We are probably somewhere between being run purely by our emotions and deterministic decisions, somewhere on this spectrum.

Feedback vs Reflection

Have called the project Feedback for the last year, but based on conversations with Ezra Shibboleth and Anirveda, now think a better name might be “REFLECTION”.

Yikes - might be hard to make that into a visual you can read both ways like feedback…

Needed distraction so mocked something up real quick - kinda fun - maybe reflection is the name of the project, and feedback is the name of the robot?

Or maybe call it réflexion?

Developing an Aesthetic

2023 was spent experimenting and developing an aesthetic. 2024 will be spent executing on it and, more importantly, bringing the concepts of AI and the Blockchain a step forward. Months were spent experimenting and creating these images, but the code is incomplete and I am currently concentrating on codifying the creative process that produced these paintings.

The Monograph

One thing that I have been working on with a team of people for almost a year is a monograph. Monographs are books about an artist and their style, often self-published for distribution to galleries and curators. Today for the first time I saw the finished book, and am humbled by its content. The people that have put this together with me are amazing, and I can't wait to release it next month. Stay tuned for details, but until then a sneak peek…

Dualities

Feedback examines dualities.

Its paintings are a fight between Creativity and Logic.

It paints Expressively, while drawing Precisely.

Are its images coming from Emotion, or are they purely Deterministic?

And another duality it will be exploring is the Physical versus the Digital.

Our work together will exist both on painted canvases and in purely digital, 100% on-chain form.

You have seen the physical, the 100% on-chain version will be just as high quality - regardless of the cost.

A sneak peek of a detail:

Layer by Layer

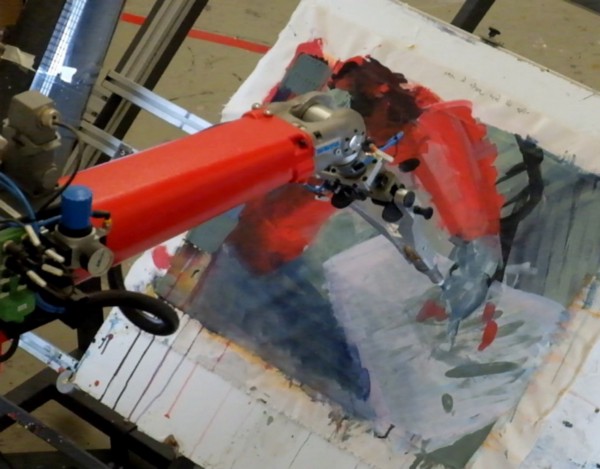

One approach to painting is to paint in layers, working the entirety of a canvas one layer at a time, increasing the detail and finish with each layer.

This is how I collaborate with Feedback.

Have taught it to paint with a creative process like my own. It works one layer at a time, and between each layer it looks at the canvas and asks itself what the next layer needs.

If it's feeling expressive, it paints with a brush. When it needs to be technical, it draws with a pen.

Pindar

Feedback

There are two branches of my art.

The critically acclaimed AI Faces, and the commercially successful bitGANs.

This is not unusual. Throughout Art History (my favorite example being Turner) artists sometimes follow two paths simultaneously. One that keeps their studio open by appealing to the average patron, and another that challenges their viewers. Both are important to the artists, but the second branch needs the support of the first to exist.

This has been my experience with bitGANs and AI Faces. The unexpected commercial success of the bitGANs has allowed me to expand and go deep with my exploration of AI Faces. I now have multiple robot arms with multiple paint heads in a dedicated studio as I set out to launch the Feedback Series in 2024.

I minted the first Feedback painting today, January 1, 2024. See it here on SuperRare. It is hard to describe other than it is the culmination of everything I have ever worked on. It is even a merging of the two branches I just described.

The bitGANs and AI Faces are coming together.

How exactly?

Be sure to follow along here and on all my new social media accounts as I spend 2024 decentralizing from Twitter.

Discord: discord.gg/72JSspz2hR

Warpcast: warpcast.com/vanarman

Twitter: twitter.com/VanArman

Instagram: instagram.com/vanarman_

Threads: threads.net/@vanarman_

Pindar

Introducing artonomous - a collaboration with Kitty Simpson

Launching into a big collaboration with photographer Kitty Simpson where we are going to make a couple hundred portraits together. We are calling the project artonomous and the goal is to bring my robots closer to creating fine art.

I’ll begin by revealing the logo, which I can’t believe I came up with. This is single-handedly the most creative thing I have ever made and alone makes this project worthwhile. It has four layers of meaning hidden in it, making it perhaps the deepest piece of art I have ever created ;)

Beyond the logo, we are going in the opposite direction of most other A.I. Art projects. Instead of inhaling vast amounts of data to train my neural networks, we are going with a very curated set. We will still need hundreds (even thousands) of images to train my robots to see and paint faces, but all of them will be highly curated by Kitty Simpson.

Instead of gorging the networks with thousands and thousands of face photos taken by paparazzi like the famous CelebA dataset, we will be feeding my neural networks the refined photography of Kitty Simpson. Each portrait will be taken or directed by Kitty then sent into my robot’s creative mind. Once it has applied its A.I. to studying and analyzing the photo, it will paint a portrait.

Every eight or so paintings, the robot will study its own brushstrokes to improve its neural networks.

It will then attempt to paint an original face based on the highly curated photos and its own paintings.

Afterwards Kitty and I critique the work and reprogram the robot’s A.I. and neural networks to better interpret and render Kitty’s photography.

This cycle is repeated over and over again in a creative feedback loop as the robot refines its fine art portraiture.

We plan on doing about 256 portraits like this. Should be fun. The following is a timelapse of the first imagined portrait in the series…

Pindar

Artonomo.us - beginnings of a completely autonomous painting robot.

Artonomo.us is my latest painting robot project.

In this project, one of my robots

1: Selects its own imagery to paint.

2: Applies its own style with Deep Learning Neural Networks.

3: Then paints with Feedback Loops.

An animated GIF of the process is below…

Artonomo.us is currently working on a series of sixteen portraits in the style above. The portraits were selected from user submitted photos. It will be painting these portraits over the next couple weeks. When done it will select a new style and sixteen new user submitted portraits. If you would like to see more of these portraits, or submit yours for consideration, visit artonomo.us.

Below are the images selected for this round of portraits. We will publish the complete set when Artonomo.us is finished with it…

Pindar

NY Art Critic Jerry Saltz thinks my Painting Robots have Good Taste

New York Magazine's Art Critic Jerry Saltz recently reviewed several AI generated pieces of art including my own. The piece begins at 19:45 in the above HBO Vice video. I was prepared for the worst, but pleased when he looked at this Portrait of Elle Reeve and commented that my robots have "good taste" and that "It doesn't look like a computer made it." But he then paused and concluded "That doesn't make it any good." But I loved the review since a couple of years ago the art world did not even consider what I was doing to be art. At least now it is considered bad art. That's progress!

All the AI generated art got roasted, but at least I got what I considered to be the best review of all my colleagues which included some of the world's finest AI generated artwork.

Pindar

New Art Algorithm Discovered by autonymo.us

Have started a new project called autonymo.us where I have let one of my painting robots go off on its own. It experiments and tries new things, sometimes completely abstract, other times using a simple algorithm to complete a painting. Sometimes it uses different combinations of the couple dozen algorithms I have written for it.

Most of the results are horrendous. But sometimes it comes up with something really beautiful. I am putting the beautiful ones up at the website autonymo.us. But also thought I would share new algorithm discoveries here.

So the second algorithm we discovered looks really good on smiling faces. And it is really simple.

Step 1: Detect that subject has a big smile. Seriously cause this doesn’t look good otherwise.

Step 2: Isolate background and separate it from the faces.

Step 3: Quickly cover background with a mixture of teal & white paint.

Step 4: Use K-Means Clustering to organize pixels with respect to their r, g, b values and x, y coordinates.

Step 5: Paint light parts of painting in varying shades of pyrrole orange.

Step 6: Paint dark parts of painting in crimsons, grays, and black.
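For anyone curious what Step 4 might look like in code, here is a rough sketch in plain Python. It is not the code my robot runs. The function names, the `xy_weight` scaling, the farthest-point seeding, and the tiny 4x4 stand-in image are all assumptions made for illustration. It clusters each pixel on (r, g, b, x, y), then labels each cluster light or dark the way Steps 5 and 6 need.

```python
def pixel_features(image, xy_weight=0.5):
    """Turn a grid of (r, g, b) tuples into (r, g, b, x, y) vectors in 0..1.

    xy_weight is a hypothetical knob that controls how much position
    matters relative to color.
    """
    height, width = len(image), len(image[0])
    feats = []
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            feats.append((r / 255, g / 255, b / 255,
                          xy_weight * x / max(width - 1, 1),
                          xy_weight * y / max(height - 1, 1)))
    return feats


def sq_dist(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b))


def init_centers(points, k):
    """Deterministic seeding: start at the first point, then keep adding
    the point farthest from the centers chosen so far."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points,
                           key=lambda p: min(sq_dist(p, c) for c in centers)))
    return centers


def kmeans(points, k, iterations=20):
    centers = init_centers(points, k)
    labels = []
    for _ in range(iterations):
        # Assignment step: each pixel joins its nearest center.
        labels = [min(range(k), key=lambda c: sq_dist(p, centers[c]))
                  for p in points]
        # Update step: each center moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = tuple(sum(dim) / len(members)
                                   for dim in zip(*members))
    return labels, centers


def is_light(center, cutoff=0.5):
    """Steps 5 and 6 split on brightness: mean of a center's r, g, b parts."""
    return sum(center[:3]) / 3 > cutoff


# Tiny 4x4 stand-in image: dark left half, light right half.
dark, light = (20, 20, 20), (235, 235, 235)
image = [[dark, dark, light, light] for _ in range(4)]
labels, centers = kmeans(pixel_features(image), k=2)
```

In this toy case the light cluster would get the oranges and the dark cluster the crimsons, grays, and black. Including x and y in the features is what keeps each cluster spatially compact, so a cluster reads as a region to paint rather than a scatter of pixels.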

A simple and fun algorithm to paint smiling portraits with. Here are a couple…

Augmenting the Creativity of A Child with A.I.

The details behind my most recent painting are complex, but the impact is simple.

My robots had a photoshoot with my youngest child, then painted a portrait with her in the style of one of her paintings. Straightforward A.I. by today’s standards, but who really cares how simple the process behind something is as long as the final results are emotionally relevant.

With this painting there were giggles as she painted alongside my robot and an amazed result when she saw the final piece develop over time, so it was a success.

The following image shows the inputs, my robot’s favorite photo from the shoot (top left) and a painting made by her (top middle). The A.I. then created a CNN style transfer to reimagine her face in the style of her painting (top right). As the robot worked on painting this image with feedback loops, she painted along on a touchscreen, giving the robot direction on how to create the strokes (bottom left). The robot then used her collaborative input, a variety of generative A.I., Deep Learning, and Feedback Loops to finish the painting one brushstroke at a time (bottom right).

In essence, the robot was using a brush to remove the difference between an image that was dynamically changing in its memory and what it saw emerging on the canvas. A timelapse of the painting as it was being created is below…
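The feedback loop idea can be sketched in a few lines of plain Python. This is a toy under heavy assumptions, not my robot's actual code: the canvas and the target are small grids of grayscale values, a "stroke" touches a single pixel, and `get_target`, `apply_stroke`, `opacity`, and `tolerance` are hypothetical names. The point is only the loop itself: look at the canvas, find where it differs most from the image in memory, paint there, look again.

```python
def largest_error(target, canvas):
    """Return (error, x, y) for the pixel where the canvas is furthest
    from the target image."""
    best = (0.0, 0, 0)
    for y, (t_row, c_row) in enumerate(zip(target, canvas)):
        for x, (t, c) in enumerate(zip(t_row, c_row)):
            err = abs(t - c)
            if err > best[0]:
                best = (err, x, y)
    return best


def apply_stroke(canvas, x, y, value, opacity=0.8):
    """Simulate one brushstroke: blend paint of `value` into one pixel."""
    canvas[y][x] += opacity * (value - canvas[y][x])


def paint_with_feedback(get_target, canvas, max_strokes=1000, tolerance=0.01):
    """Paint until the canvas matches the target, one stroke at a time."""
    strokes = 0
    while strokes < max_strokes:
        target = get_target(strokes)                # the goal may change over time
        err, x, y = largest_error(target, canvas)   # "look" at the canvas
        if err <= tolerance:
            break
        apply_stroke(canvas, x, y, target[y][x])
        strokes += 1
    return strokes


# Usage: a fixed 2x2 target and a blank canvas.
target = [[0.0, 1.0], [0.5, 0.25]]
canvas = [[0.0, 0.0], [0.0, 0.0]]
strokes = paint_with_feedback(lambda n: target, canvas)
```

Because `get_target` is called before every stroke, the image in memory is free to change while the painting is underway, which is where a collaborator's touchscreen input could come in.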

Pindar

Solo Exhibition and Robot Art Installation

From September 6th-29th, my painting robots will be on display in an installation in the Fashion Court of Tysons Corner Center. The exhibition will feature more than 20 paintings juxtaposing historic photographs with modern renditions of the same scene in 2018.

In addition to the work featuring the Tysons area, there will be multiple AI created pieces of art as well as interactive robotic demonstrations throughout the month. The robots will be running all month. Here is a list of some of the events...

Sept 6 : 9:30 am

Opening

Sept 15 : 11:00 am - 1:00 pm

Interactive Robotic Portraiture

September 22 : Time TBD

Artist’s Talk and Print Signing

September 23 : Time TBD

Presentation of Large Scale Skyline Painting

September 27 : Time TBD

Artist’s Talk

Download Full Press Release Here...

I will be at the installation during the evenings for much of the event. Look forward to seeing everyone throughout the month and making a lot of paintings...

Pindar

Visions - Five Minute Piece on My Robot's Latest AI

Click on image or here to see recent piece about my robot's latest AI and how it uses artificial creativity to paint...

Pindar

Robot Art 2018 Award

For each of the past three years, internet entrepreneur Andrew Conru has sponsored a $100,000 international competition to build artistic robots called RobotArt.org. His challenge was to create beautiful paintings with machines and the only steadfast rule was that the robot had to use a brush... no inkjet printers allowed.

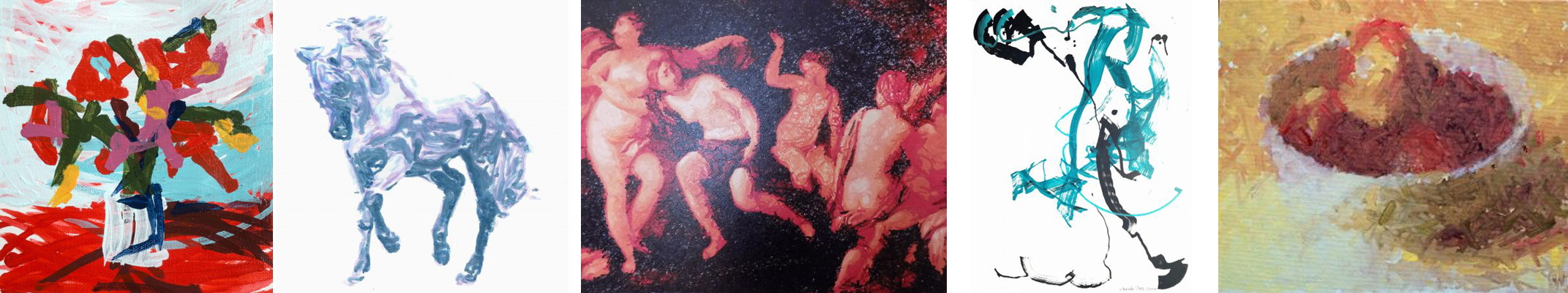

I have participated in each, and this year I was fortunate enough to be awarded the top prize. Here are three of the paintings I submitted. The first two pictured are AI generated portraits made with varying degrees of abstraction. The third is an AI generated study of a Cezanne masterpiece.

Second Place went to Evolutionary AI Visionary Hod Lipson, and third went to a team from Kasetsart University in Thailand.

Since the contest began, more than 600 robotic paintings have been submitted for consideration by artists, teams, and Universities around the world including notable robots e-David, A Roboto, TAIDA, and NORAA. The forms of the machines themselves have included robot arms, xy-tables, drones, and even a dancing snake. The works were half performance and half end product. How and why the robots were painting was as important a part of the judging as what the paintings looked like. Videos of each robot in action were a popular attraction of the contest.

2016 Winner TAIDA painting Einstein

The style of the paintings was as varied as the robots. These five paintings from the 2018 competition show some of the variety. From left to right, these are the work of Canadian Artist Joanne Hastie, Japanese A Roboto, Columbia's Hod Lipson, Californian Team HHS, and Australian Artist Robert Todonai.

Like all things technology related recently, the contest also saw an increase in the amount of AI used by the robots each year.

In the first year only a couple of teams used AI including e-David and myself. In the self portrait on the left, e-David used feedback loops to watch what it was painting and make adjustments accordingly, refining the painting by reducing error one brush stroke at a time.

While AI was unusual when the contest began, it has since become one of the most important tools for the robots. Many of the top entries, including mine, Hod Lipson's, and A Roboto's, used deep learning to create increasingly autonomous generative art systems. For some of the work it became unclear whether the system was simply being generative, or whether the robots were in fact achieving creativity.

This has been one of my favorite projects to work on in recent years. Couldn't be happier than to have won it this year. If you are interested in seeing higher resolution images of my submissions as well as three that are for sale, you can see more in my CryptoArt gallery at superrare.co.

Channelling Picasso and Braque

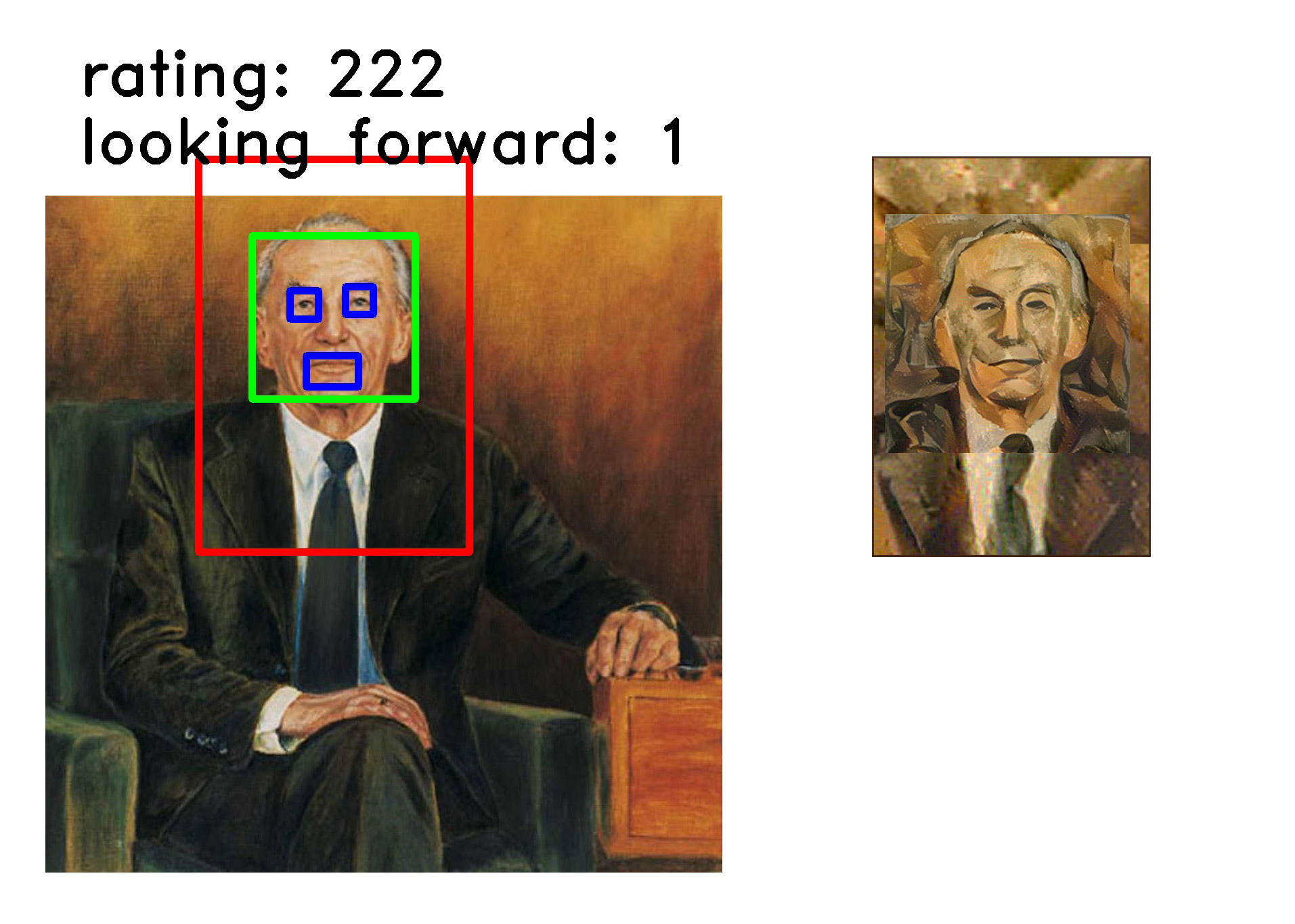

My latest robotic painting commission (seen above) began with a question and a challenge.

I was sent a couple portraits and several cubist works and asked

“whether style transfer works better or whether there are other ways of training the algorithm to repaint a picture analytically in a similar way that Picasso and Braque did when they started (i.e. dissecting a picture into basic geometrical shapes, getting more abstract with every round, etc.).”

It seemed obvious to me that the analytical approach would be better, if only for the reason that that was what the original artists had done. But I wasn’t sure and experimenting with this question sounded like a wonderful idea, so I dived into the portrait. I began by first using style transfer to make a grid of portraits as reimagined by neural networks.

The results were as could be expected, but regardless I always find it amazing how well cubist work lends itself to Style Transfer. This process works for some art styles and completely fails on others, but I have always found that it is particularly well suited for cubism.

With several style transfers completed, I began experimenting with a more analytical approach. The first process I tried was to use Hough lines to attempt to find lines, and therefore shapes, in both the original portraits and the neural network imagined ones.

The image above shows many of the attempts to find and define line patterns in both the original portraits and the cubist style transfers. I was expecting better results, but the information was at least useful…

It showed me which of the original six cubist style transfers had the most defined shapes and lines (right). It also gave my robots a frame of reference for how to paint their strokes when the time would come.
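For readers who have not met Hough lines before, here is a minimal sketch of the voting idea in plain Python. It is an illustration only, not what my robots ran, and in practice a library routine such as OpenCV's `HoughLines` would normally do this work. The function name, the `n_theta` resolution, and the `threshold` are assumptions. Every edge pixel votes for all the lines that could pass through it, and lines that collect many votes are kept.

```python
import math


def hough_lines(edge_points, n_theta=180, threshold=5):
    """Vote in (rho, theta) space and return the well-supported lines.

    A line is described by rho = x*cos(theta) + y*sin(theta).
    Returns (votes, rho, theta_in_degrees) tuples, strongest first.
    """
    votes = {}
    for x, y in edge_points:
        for t in range(n_theta):
            theta = math.pi * t / n_theta
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(rho, t)] = votes.get((rho, t), 0) + 1
    lines = [(v, rho, t * 180 / n_theta)
             for (rho, t), v in votes.items() if v >= threshold]
    return sorted(lines, reverse=True)


# Usage: ten edge pixels along the horizontal row y = 3.
edge_points = [(x, 3) for x in range(10)]
lines = hough_lines(edge_points, threshold=8)
```

With coarse bins like these, several neighboring angles tie for the top spot, which hints at why counting lines this way can be a noisy measure of how much structure an image has.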

I was a little stumped though. I had expected better results, and my plan was to use those results to determine the path forward. I thought I could perhaps find shapes in the images, and then use the shapes to draw other shapes to create abstract portraits. But those shapes did not materialize from my algorithms. Frustrated and wondering where to go next, I put the images through many of my traditional AI algorithms including the Viola-Jones facial detection algorithm and some of my own beauty measuring processes.

My robots gathered and studied hundreds of images using a variety of criteria. In the end it settled on the following composition and style. The major deciding factor for going with this composition was that it found it to be the most aesthetically pleasing of every variation it tested. The reason it picked the style was that it was the style that produced the richest set of analytical data. For example, the Hough lines performed in the previous processes revealed more lines and shapes in this particular Picasso than any of the other style transfers. I expected that this would be useful later on in the process.

At this point I wanted to go forward but was still unsure of how to proceed. Fortunately artists have a time honored trick when they do not know what to do next. We just start painting. So I loaded all the models into one of my large format robots and set it to work. I figured the answer to what to do next would emerge as the painting was being made.

As the robot painted for about a day, it did not make many aesthetic decisions. It just painted the general texture and shading of the portrait, taking direction from the Hough lines. As I was waiting for this sketch to finish, a second suggestion came from the commissioner of the portrait. I was asked:

“What if we tried to run a standard photo morphing process from one of the faces of the classical cubist work and see whether an interim-stage picture of this process could be a basis for the AI to work from?”

I had never done this before and it was an interesting thought so I gave it a shot. I found the results intriguing. Furthermore, it was fun to see the exact point in the morphing process at which the likeness disappears, and the face becomes a Braque painting.
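The two ingredients of a standard morph can be sketched in plain Python. This is a simplification with assumed names, not the tool I used: matching feature points slide from their position in one image to their position in the other, and pixel values cross-dissolve by the same fraction `t`. A full morph also warps each image to follow the moving points before blending, which is left out here.

```python
def interpolate_points(src_pts, dst_pts, t):
    """Move each feature point a fraction t of the way from source to target."""
    return [((1 - t) * sx + t * dx, (1 - t) * sy + t * dy)
            for (sx, sy), (dx, dy) in zip(src_pts, dst_pts)]


def cross_dissolve(src, dst, t):
    """Blend two same-sized grayscale images: t=0 gives src, t=1 gives dst."""
    return [[(1 - t) * s + t * d for s, d in zip(s_row, d_row)]
            for s_row, d_row in zip(src, dst)]


# Usage: an interim stage a quarter of the way from the photo to the painting.
photo = [[0.0, 1.0]]
painting = [[1.0, 1.0]]
interim = cross_dissolve(photo, painting, 0.25)
eye = interpolate_points([(0, 0)], [(10, 20)], 0.5)
```

Stepping `t` from 0 to 1 produces the frames of the animation, and any single value of `t` gives one interim-stage picture that could serve as a basis to work from.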

Another interesting aspect of creating the morphing animation was that I was able to compare it to an animation of how the neural networks applied style transfer. For the comparison, both animations can be seen to the left.

Interesting comparison aside, I had a path forward. I showed my robots how to use the morphed image. For the second stage of the painting they worked towards completing the portrait in the style of a Braque.

I was really liking the results, however, there was one major problem. The portrait was too abstract. It no longer resembled the subject of the portrait. It had lost the likeness, and the one thing that every portrait should do is resemble the subject, at least a little. This portrait didn’t and I realized it needed another round to bring the likeness back.

To accomplish this I had the robot use both another round of style transfer and morphing to return to something that better resembled the original portrait. The robot had gone too abstract, so I was telling it to be more representational.

While the style transfer at this stage was automatic, the morphing required manual input from me. I had to define the edges of the various features in both the source abstract image and the style image. I tried to automate this step, but none of my algorithms could make enough sense of the abstract image. It didn’t know how to define things such as the background or location of the eyes. I will work on better approaches in the future, but for now I do not find it surprising that this is a weakness of my robot’s computer vision.

The final painting was completed over the course of a week by my largest robot. Below is a timelapse video of its completion…

The description I just gave about how my robots produced this has been an abbreviated outline of my entire generative AI art system. Several other algorithms and computer vision systems were used though I really only concentrated on describing the back and forth between Style Transfer and Morphing. The robot began by using neural networks to produce something in the style of a cubist Picasso, then morphed the image to imitate a Braque. Once things had gone too abstract, it finished by using both techniques to return to a more representational portrait. Back and forth and back again.

After the robot had completed the 12,382 strokes seen in the video, I touched it up by hand for a couple of minutes before applying a clear varnish. It is always a relief that while my robots can easily complete 99.9% of the thousands of strokes they apply, I am still needed for the final 0.1%. My job as an artist remains safe for now.

Pindar Van Arman

cloudpainter.com

AI as a Creative Tool - Art with the Assistance of Painting Robots

"AI as a Creative Tool - Art with the Assistance of Painting Robots" is what I am calling my recent talk at the Aspen Institute in Berlin. Just finished editing footage of the talk to include my slides. Enjoy...

Pindar

Emerging Faces - A Collaboration with 3D (aka Robert Del Naja of Massive Attack)

Have been working on and off for the past several months with Bristol-based artist 3D (aka Robert Del Naja, the founder of Massive Attack). We have been experimenting with applying GANs, CNNs, and many of my own artificial intelligence algorithms to his artwork. I have long been working at encapsulating my own artistic process in code. 3D and I are now exploring if we can capture parts of his artistic process.

It all started simply enough with looking at the patterns behind his images. We started creating mash-ups by using CNNs and Style Transfer to combine the textures and colors of his paintings with one another. It was interesting to see what worked and what didn't and to figure out what about each painting's imagery became dominant as they were combined.

As cool as these looked, we were both left underwhelmed by the symbolic and emotional aspects of the mash-ups. We felt the art needed to be meaningful. All that was really being combined was color and texture, not symbolism or context. So we thought about it some more and 3D came up with the idea of trying to use the CNNs to paint portraits of historical figures that made significant contributions to printmaking. A couple of people came to mind as we bounced ideas back and forth before 3D suggested Martin Luther. At first I thought he was talking about Martin Luther King Jr, which left me confused. But then I realized he was talking about the author of The 95 Theses and it made more sense. Not sure if 3D realized I was confused, but I think I played it off well and he didn't suspect anything. We tried applying CNNs to Martin Luther's famous portrait and got the following results.

It was nothing all that great, but I made a couple of paintings from it to test things. Also tried to have my robots paint a couple of other new media figures like Mark Zuckerberg.

Things still were not gelling though. Good paintings, but nothing great. Then 3D and I decided to try some different approaches.

I showed him some GANs where I was working on making my robots imagine faces. Showed him how a really neat part of the GAN occurred right at the beginning when faces emerge from nothing. I also showed him a 5x5 grid of faces that I have come to recognize as a common visualization when implementing GANs in tutorials. We got to talking about how as a polyptych, it recalled a common Warhol trope except that there was something different. Warhol was all about mass produced art and how cool repeated images looked next to one another. But these images were even cooler, because it was a new kind of mass production. They were mass produced imagery made from neural networks where each image was unique.

I started having my GANs generate tens of thousands of faces. But I didn't want the faces in too much detail. I liked how they looked before they resolved into clear images. It reminded me of how my own imagination works when I try to picture things in my mind. It is foggy and nondescript. From there I tested several of 3D's paintings to see which would best render the imagined faces.

3D's Beirut (Column 2) was the most interesting, so I chose that one and put it and the GANs into the process that I have been developing over the past fifteen years. A simplified outline of the artificially creative process it became can be seen in the graphic below.

My robots would begin by having the GAN imagine faces. Then I ran the Viola-Jones face detection algorithm on the GAN images until it detected a face. At that point, right when the general outlines of faces emerged, I stopped the GAN. Then I applied a CNN Style Transfer on the nondescript faces to render them in the style of 3D's Beirut. Then my robots started painting. The brushstroke geometry was taken out of my historic database that contains the strokes of thousands of paintings, including Picassos, Van Goghs, and my own work. Feedback loops refined the image as the robot tried to paint the faces on 11"x14" canvases. All told, dozens of AI algorithms, multiple deep learning neural networks, and feedback loops at all levels started pumping out face after face after face.
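The "stop the GAN the moment a face emerges" step can be sketched as below. This is a toy under assumptions, not my production code: `snapshots` stands for images saved at successive stages as the GAN's faces resolve, and `detect_face` stands for whatever detector is plugged in (Viola-Jones in my case). Both names are hypothetical. In the usage lines each image is replaced by a single clarity number so the sketch runs on its own.

```python
def first_emerging_face(snapshots, detect_face):
    """Return (step, image) for the first snapshot in which a face is found.

    snapshots:   iterable of images from successive stages of the GAN.
    detect_face: callable returning True once the detector sees a face.
    Returns None if no snapshot ever triggers the detector.
    """
    for step, image in enumerate(snapshots):
        if detect_face(image):
            return step, image
    return None


# Usage with stand-ins: each "image" is just a clarity score, and the
# pretend detector fires once clarity passes 0.5.
snapshots = [0.1, 0.3, 0.55, 0.9]
result = first_emerging_face(snapshots, lambda clarity: clarity > 0.5)
```

The image returned is the foggy one taken right as the outline appears. That image, not the fully resolved face, is what would go on to style transfer and then to the brush.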

Thirty-two original faces later it arrived at the following polyptych which I am calling the First Sparks of Artificial Creativity. The series itself is something I have begun to refer to as Emerging Faces. I have already made an additional eighteen based on a style transfer of my own paintings, and plan to make many more.

Above is the piece in its entirety as well as an animation of it working on an additional face at an installation in Berlin. You can also see a comparison of 3D's Beirut to some of the faces. An interesting aspect of the artwork is that despite how transformative the faces are from the original painting, the artistic DNA of the original is maintained with those seemingly random red highlights.

It has been a fascinating collaboration to date. Looking forward to working with 3D to further develop many of the ideas we have discussed. Though this explanation may appear to express a lot of artificial creativity, it only goes into his art on a very shallow level. We are always talking and wondering about how much deeper we can actually go.

Pindar