Using Elasticsearch and Beats to Capture Artistic Style

Using Beats and Elasticsearch to Model Artistic Style

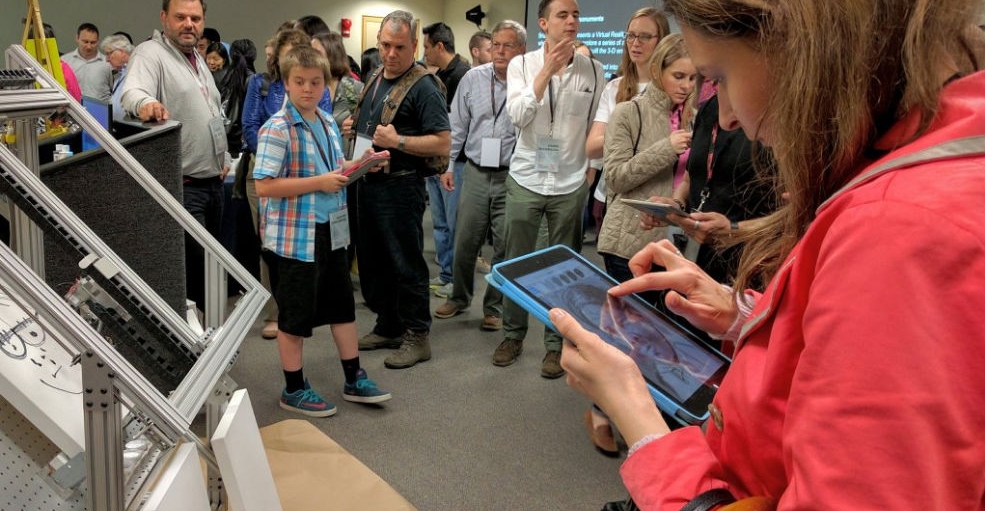

I have been making painting robots for about ten years now. These robots use a variety of AI and machine learning approaches to paint original compositions with an artist’s brush on stretched canvas, and not just abstract work, but paintings in a variety of genres, including portraiture. We have recently developed a novel way of using Elasticsearch and Beats to enhance the stylistic capabilities of our painting robots that we would love to share at Elastic{on} 2017.

The paintings we create together have gotten a lot of attention recently, including awards and recognition from Google, TEDx, Microsoft, The Barbican, SXSL at The White House, robotart.org, and NPR. A longer list can be found at www.cloudpainter.com/press, though the quickest way to get up to speed is to check out this video.

Despite all this recent success, there is a major systematic problem with the artwork we are creating. While aesthetically pleasing, the individual brushstrokes themselves lack artistic style. They are noticeably mechanical and repetitive.

For the next iteration of my painting robot (the sixth), we have found a solution to the problem of these “soulless” brushstrokes. By leveraging Beats and Elasticsearch, we have found a way to analyze and model human brushstrokes. While this may not seem like an important cause in the traditional sense, capturing and emulating human creativity is one of the holy grails of artificial intelligence research. In fact, this project will rely heavily on recent state-of-the-art research by Google Brain and their ongoing success at creating pastiches, where Google’s deep learning algorithms paint photographs in the style of famous artists. If you have not seen one of Google's pastiches, here is an example taken from their blog of several photos painted in the style of several artists.

Taken from https://research.googleblog.com/2016/10/supercharging-style-transfer.html

The results are amazing, but when you look more closely you see a systematic problem that is similar to the problem our robots have.

While Vincent Dumoulin, Jonathon Shlens, and Manjunath Kudlur of the Google Brain Team have done an amazing job of transferring the color and texture from the original painting, they did not really capture the style of the brushstrokes. I offer this critique despite being deeply indebted to their work and a big fan of what they are accomplishing. But this is an acknowledged limitation of their deep learning algorithms. Brad Pitt's face does not have the swooping strokes of the face in Munch's The Scream. The Golden Gate Bridge is overly detailed, when in the painting the bridge is composed of long, stark strokes. The pastiches, while amazing, do not capture Munch's brushstroke. This is a problem because ultimately The Scream is a painting where the style of the brushstroke is a major contributor to the aesthetic effect.

How to Capture an Artist's Brushstroke?

A couple of years ago we realized that we had the data required to capture and model artistic brushstrokes. It wasn't until we started experimenting with Beats, however, that we realized just how good this data was and how well it could be used to learn artistic style from a human operator.

The first thing to understand about our painting robots is that they can paint completely on their own or take instructions from a human operator through a touchscreen interface. Wherever the brushstrokes come from, they are recorded in an Elasticsearch index before being dispatched to the robot controller, which then uses a brush to apply paint to a canvas. A typical document contains the color, a stroke vector, distance, time, and whether the brushstroke came from the AI or a human operator. Every stroke is recorded and used by the robot's AI to track progress and make decisions.
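To make the shape of such a document concrete, here is a minimal sketch in Python. The field names, the color encoding, the vector layout, and the index name `brushstrokes` are all assumptions made for illustration; the actual mapping used by the robots is not described here. The helper only builds a request body in the newline-delimited format the Elasticsearch bulk API expects and does not talk to a cluster.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class Brushstroke:
    # All field names below are hypothetical; the real index mapping may differ.
    color: str            # assumed to be a hex RGB string such as "#1f3a5f"
    vector: List[float]   # assumed layout: [x0, y0, x1, y1] in canvas coordinates
    distance: float       # length of the stroke
    timestamp: str        # ISO 8601 time at which the stroke was issued
    source: str           # "ai" or "human"


def to_bulk_body(strokes, index="brushstrokes"):
    """Build an NDJSON body for the bulk API: one action line, then one document line."""
    lines = []
    for stroke in strokes:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(asdict(stroke)))
    # The bulk format requires the body to end with a newline.
    return "\n".join(lines) + "\n"


# Illustrative values only, not real recorded strokes.
example_strokes = [
    Brushstroke("#1f3a5f", [0.10, 0.20, 0.45, 0.25], 0.354, "2016-11-01T14:03:22Z", "human"),
    Brushstroke("#d9a441", [0.50, 0.50, 0.55, 0.50], 0.050, "2016-11-01T14:03:24Z", "ai"),
]
bulk_body = to_bulk_body(example_strokes)
```

Keeping the `source` field on every document is what makes the later comparison between AI and human strokes a simple filter or aggregation.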

Over the past several years we have collected brushstroke metrics for hundreds of paintings, some in SQL and some in Elasticsearch, amounting to millions upon millions of brushstrokes. When you analyze the metrics of the AI-generated brushstrokes, they are uniform and repetitive. Human-generated strokes, on the other hand, are all over the place. After examining these metrics, we realized that Beats and Elasticsearch could be leveraged to analyze and model how humans were applying the brushstrokes. In turn, this model could be used in the creation of the AI brushstrokes to make them less repetitive and more stylistic.

In essence, Elasticsearch and Beats are being used to try to capture the cadence and rhythm of human artists, and deep learning is being applied in an attempt to learn how to make the brushstrokes and create paintings with a similar feel. If a human operator applies swirling strokes, like Van Gogh, the robot will paint with swirling strokes. If the strokes are quick and choppy, the robot will learn to paint with quick, choppy strokes. If deep learning reveals that a human artist begins artwork with long, smooth strokes and ends with short, chaotic ones, the robot can and will learn to emulate that pattern as well. It is all in the data.
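One simple way to put a number on "uniform" versus "all over the place" is to compare the spread of a stroke metric per source. The sketch below is only an illustration, not the analysis we actually run: it computes the coefficient of variation (standard deviation divided by mean) of stroke distance for each source, and the field names and sample values are made up for the example.

```python
from collections import defaultdict
from statistics import mean, pstdev


def variability_by_source(strokes):
    """Return {source: coefficient of variation of stroke distance}."""
    distances = defaultdict(list)
    for stroke in strokes:
        distances[stroke["source"]].append(stroke["distance"])

    result = {}
    for source, values in distances.items():
        average = mean(values)
        # Guard against division by zero when every distance is 0.
        result[source] = pstdev(values) / average if average else 0.0
    return result


# Made-up sample data: the "ai" strokes are identical, the "human" ones vary.
sample = [
    {"source": "ai", "distance": 10.0},
    {"source": "ai", "distance": 10.0},
    {"source": "ai", "distance": 10.0},
    {"source": "human", "distance": 4.0},
    {"source": "human", "distance": 10.0},
    {"source": "human", "distance": 22.0},
]
spread = variability_by_source(sample)
```

A value near zero means every stroke looks alike; larger values mean more variety. The same comparison could be made on any recorded metric, such as stroke duration or direction.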

Furthermore, as we played and experimented with the data, we also realized that Beats and Elasticsearch could record metrics efficiently enough to emulate the style of the human-generated strokes in real time. To take advantage of this, two robotic arms were recently installed: one to be completely controlled by a human operator, and a second to paint strokes generated from a deep learning analysis of the human operation. This part of the project is in the very early stages of development, though it should be complete in about a month.

While we know where things will be a month from now, this project is doing its best to follow the most recent advances in AI and deep learning. As such, it is difficult to say where it will be beyond a couple of months from now, let alone the next couple of years. AI research is progressing so quickly that I already feel my TEDx Talk from six months ago is obsolete. All I know is that we will be working on it. While we are being flexible with our vision of the project's future, we do think one thing will stay constant, and that is the need to capture any and all data about the creation of a painting. Even if we don’t understand the data’s meaning today, it may become clearer as AI-driven technology advances.

Since Beats and Elasticsearch make capturing the data and metrics so straightforward, we foresee using them long into the future, whatever path the rest of the project takes.